Apple has announced new efforts to detect child sexual abuse material (CSAM) on customers’ devices. However, the plan has raised privacy concerns that the scanning could be expanded to look for much more than CSAM.

The new child safety features will be introduced in three areas. The first is a set of new communication tools that let parents play a more informed role in their kids’ online communications.

In the second, iOS and iPadOS will use new applications of cryptography to detect CSAM so that Apple can provide information to law enforcement. The third updates Siri and Search to give parents and children expanded information and help if they encounter unsafe situations.

“At Apple, our goal is to create technology that empowers people and enriches their lives—while helping them stay safe. We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of [CSAM],” said Apple.

Communication safety

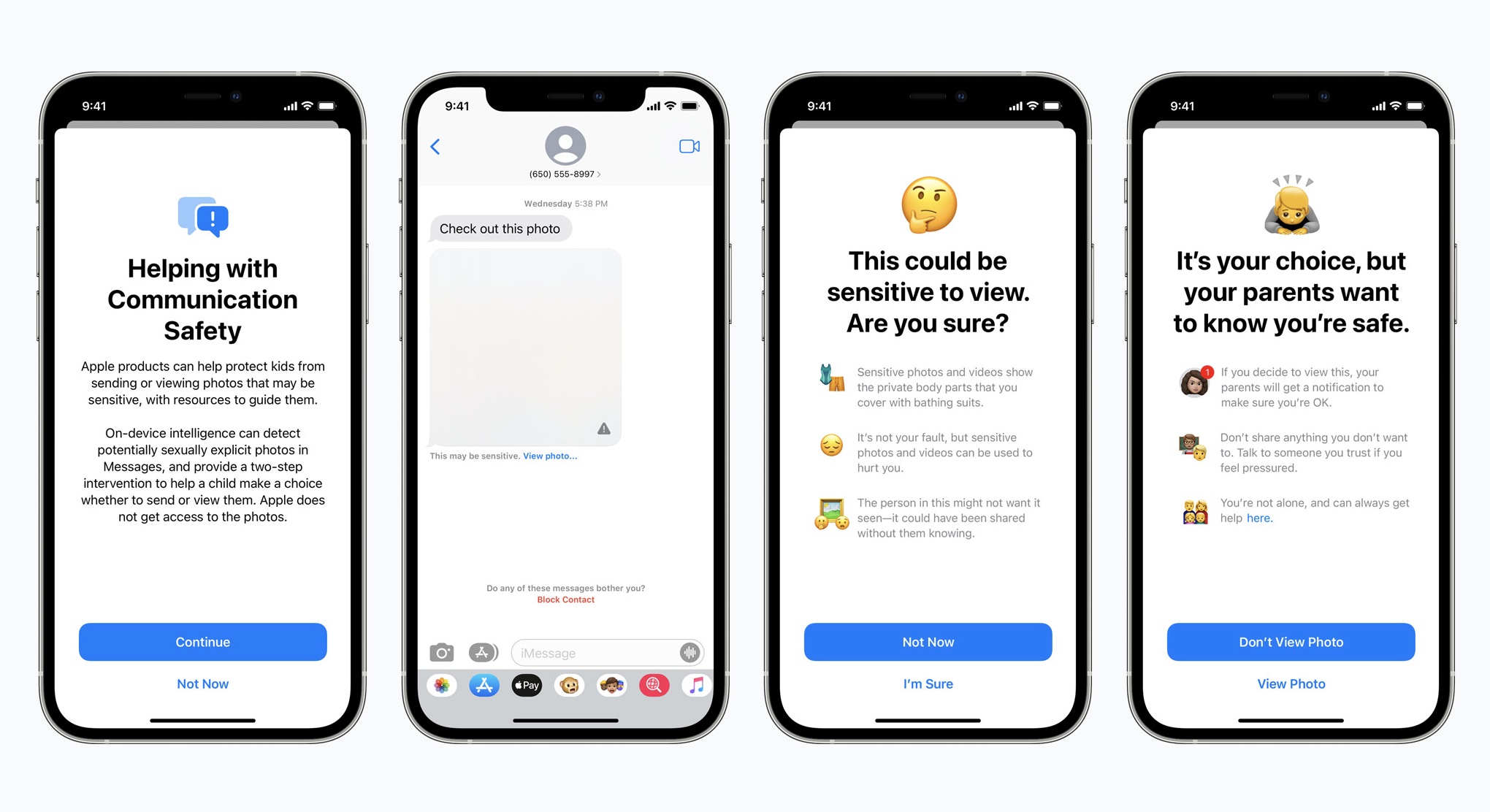

Apple will add new tools to the Messages app that warn children and their parents when the child receives or sends sexually explicit photos. For example, if a child receives a sexually explicit photo, the photo will be blurred, the child will be warned, and they will be reassured that it is okay if they do not want to view it.

As an additional precaution, the child can also be told that their parents will get a message if they do view it. Similar protections apply if a child attempts to send a sexually explicit photo: the child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it.

The feature uses on-device machine learning to analyse image attachments and determine whether a photo is sexually explicit. It is also designed “so that Apple does not get access to the messages”.
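The warn-then-notify flow described above can be sketched as a small decision function. This is a hypothetical illustration, not Apple’s code: it assumes the on-device classifier outputs a score between 0 and 1, and the cut-off `EXPLICIT_THRESHOLD`, the names `PhotoDecision` and `handle_incoming_photo`, and the `parent_notifications_enabled` switch are all invented for the example, since Apple has not published these details.

```python
from dataclasses import dataclass

# Hypothetical cut-off: Apple has not published how its classifier output is thresholded.
EXPLICIT_THRESHOLD = 0.9


@dataclass
class PhotoDecision:
    blurred: bool         # show the photo blurred instead of in full
    warn_child: bool      # warn the child and reassure them it is okay not to view it
    notify_parents: bool  # send the parents a message


def handle_incoming_photo(explicit_score: float,
                          child_chose_to_view: bool,
                          parent_notifications_enabled: bool) -> PhotoDecision:
    """Decide how a received photo is treated, given a (hypothetical) classifier score in [0, 1]."""
    is_explicit = explicit_score >= EXPLICIT_THRESHOLD
    return PhotoDecision(
        blurred=is_explicit,
        warn_child=is_explicit,
        # Parents are messaged only if the photo was flagged AND the child went on to view it.
        notify_parents=is_explicit and child_chose_to_view and parent_notifications_enabled,
    )


print(handle_incoming_photo(0.97, child_chose_to_view=False, parent_notifications_enabled=True))
# prints PhotoDecision(blurred=True, warn_child=True, notify_parents=False)
```

The key property the sketch captures is that the analysis and the decision both happen locally, so nothing in this flow needs to send the message content to Apple.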

The technology is coming out “later this year to accounts set up as families in iCloud” in updates to iOS 15, iPadOS 15, and macOS Monterey.

CSAM detection

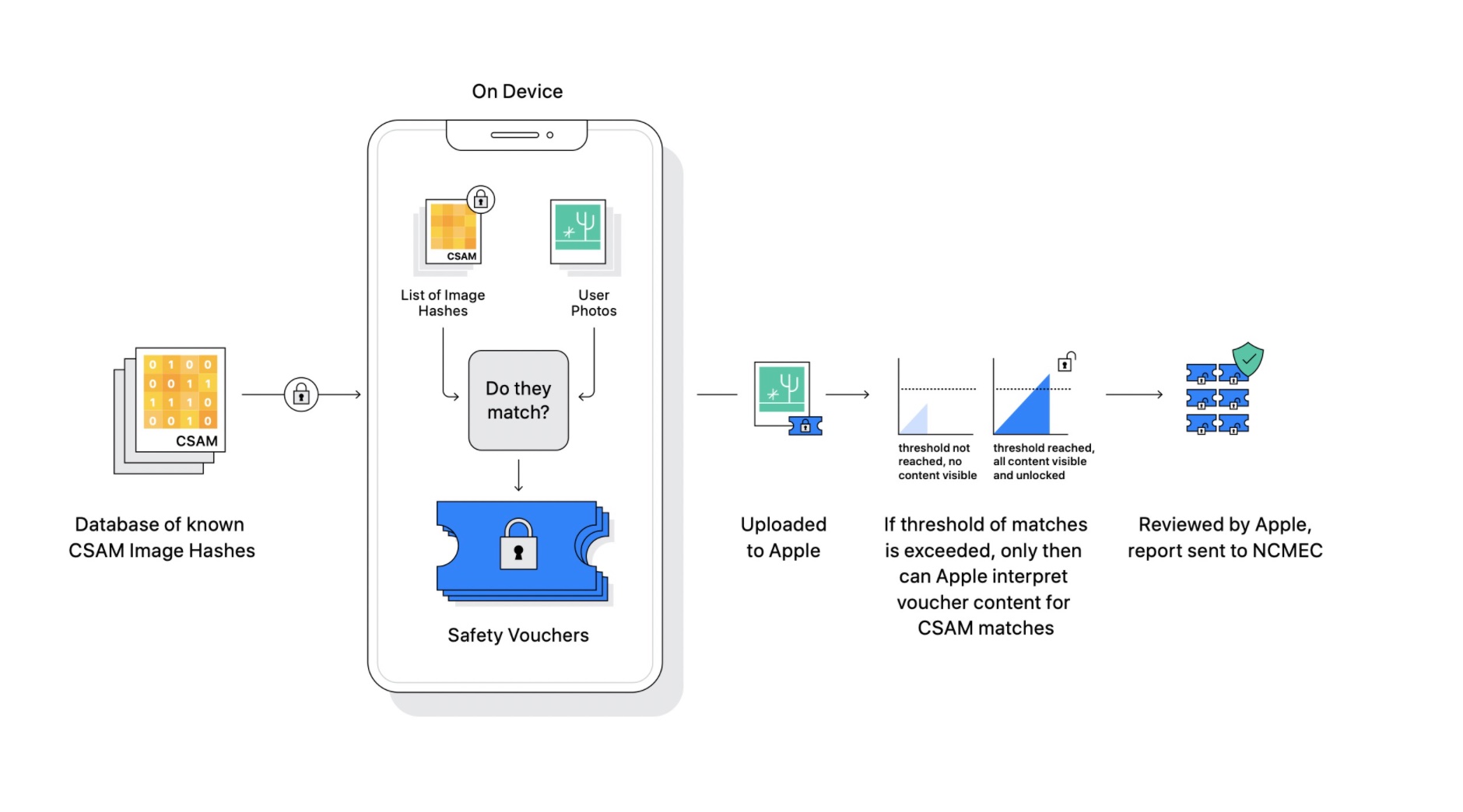

Using “new technology in iOS and iPadOS”, Apple will also be able to detect known CSAM images stored in iCloud Photos. Instead of scanning images in the cloud, the system performs on-device matching against a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organisations.

“Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result,” explained Apple.
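The general idea behind private set intersection can be illustrated with a textbook Diffie-Hellman-style PSI, in which each side blinds hashed items with a secret exponent and matching items end up with equal double-blinded values. This is a toy sketch under loud assumptions, not Apple’s protocol: it hashes items with SHA-256 rather than a perceptual image hash, uses the Mersenne prime 2^127 - 1 as a toy modulus that offers no real security, simulates both parties in one process, and, unlike the system Apple describes, lets the client learn which items matched. The helper names `hash_to_group`, `random_exponent`, `blind` and `psi_intersection` are hypothetical.

```python
import hashlib
import math
import secrets

# Toy modulus: the Mersenne prime 2**127 - 1. Illustrative only, NOT a secure group choice.
P = 2**127 - 1


def hash_to_group(item: bytes) -> int:
    """Hypothetical helper: map an item to an integer in [1, P-1] via SHA-256."""
    digest = int.from_bytes(hashlib.sha256(item).digest(), "big")
    return digest % (P - 1) + 1


def random_exponent() -> int:
    """Pick a secret exponent coprime to P-1 so that x -> x**k mod P is a bijection."""
    while True:
        k = secrets.randbelow(P - 2) + 1
        if math.gcd(k, P - 1) == 1:
            return k


def blind(values: list[int], secret: int) -> list[int]:
    """Raise each value to the secret exponent mod P."""
    return [pow(v, secret, P) for v in values]


def psi_intersection(client_items: list[bytes], server_items: list[bytes]) -> list[bytes]:
    """Simulate both parties in one process and return the client items that match."""
    a = random_exponent()  # client's secret
    b = random_exponent()  # server's secret

    # Client -> server: hashed items blinded with a. A real deployment picks a group
    # where this cannot be inverted; this toy modulus gives no such guarantee.
    client_blinded = blind([hash_to_group(x) for x in client_items], a)

    # Server -> client: the client's values re-blinded with b, plus its own items blinded with b.
    double_blinded = blind(client_blinded, b)
    server_blinded = blind([hash_to_group(y) for y in server_items], b)

    # Client: apply a to the server's values. Because (h**a)**b == (h**b)**a mod P,
    # an item held by both sides yields the same double-blinded value.
    server_double = set(blind(server_blinded, a))
    return [x for x, v in zip(client_items, double_blinded) if v in server_double]


print(psi_intersection([b"beach.jpg", b"known-1"], [b"known-1", b"known-2"]))
# prints [b'known-1']
```

Neither side ever sends the other its raw hashes; only blinded values cross the wire, which is the property that lets a match be determined without exposing the underlying items.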

All of the matching is done on the device, and the hash database is transformed into an “unreadable set of hashes that is securely stored on users’ devices.” Apple repeatedly stressed that its method of detecting CSAM is “designed with user privacy in mind”.

Despite this privacy focus, Apple will be able to manually review the photos if they “cross a threshold of known CSAM content”. Once Apple confirms a match, it can disable the user’s account and send a report to NCMEC. Users who feel their account has been mistakenly flagged can file an appeal to have it reinstated.
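The threshold rule can be pictured as a per-account counter that triggers human review only once enough matches accumulate. This is a simplified, hypothetical sketch: in Apple’s design, a technique called threshold secret sharing means Apple cannot interpret anything about an account’s matches until the threshold is crossed, whereas this plain counter is visible at every step. The names `AccountState` and `record_upload`, the string hashes, and the threshold value of 3 are all invented for illustration; the article does not say what the real threshold is.

```python
from dataclasses import dataclass

# Hypothetical value for illustration; the real threshold is not given in the article.
REVIEW_THRESHOLD = 3


@dataclass
class AccountState:
    user_id: str
    match_count: int = 0
    flagged_for_review: bool = False


def record_upload(account: AccountState, image_hash: str, known_hashes: set[str],
                  threshold: int = REVIEW_THRESHOLD) -> bool:
    """Count matches against known hashes and flag the account for human review
    only once the count reaches the threshold. Returns the current flag."""
    if image_hash in known_hashes:
        account.match_count += 1
    if account.match_count >= threshold:
        account.flagged_for_review = True
    return account.flagged_for_review


known = {"h1", "h2", "h3"}
acct = AccountState("user-1")
print([record_upload(acct, h, known) for h in ["h1", "x9", "h2", "h3"]])
# prints [False, False, False, True]
```

A single match never triggers review on its own; the threshold is meant to reduce the chance that an isolated false match leads to an account being flagged.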

Siri and Search

Apple is also expanding guidance in Siri and Search by providing “additional resources to help children and parents stay safe online”. For example, users will be able to ask Siri how to report CSAM or child exploitation, and they will be pointed to resources explaining where and how to file a report.

“Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue,” explained Apple.

Like the Messages feature, the updates to Siri and Search are coming later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. Even though these updates are intended to protect children from abuse, privacy concerns remain.

Some worry that the technology could be expanded to scan phones for other prohibited content or even political speech. Authoritarian governments in particular could use it to spy on their citizens.

“Regardless of what Apple’s long term plans are, they’ve sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users’ phones for prohibited content. Whether they turn out to be right or wrong on that point hardly matters. This will break the dam—governments will demand it from everyone,” said Matthew Green, a security researcher at Johns Hopkins University.

Separately, Apple has given users “a better understanding of how their data is tracked” by third-party companies across various apps and websites. Its “A Day in the Life of Your Data” report explains how security features on Apple’s products offer “transparency and control”, and offers advice on how to protect your personal information.
