NVIDIA unveiled some interesting new technology at Computex 2023, one that builds on the growing trend of generative AI and brings it into games. Its Avatar Cloud Engine (ACE) will soon let developers create characters that respond to the player’s natural speech in real time.

The company says ACE for Games can be used to “build and deploy customised speech, conversation and animation AI models,” utilising several of its technologies. These include NVIDIA NeMo for building custom conversational AI, Riva for automatic speech recognition and text-to-speech, and Omniverse Audio2Face for matching facial expressions to the generated speech.

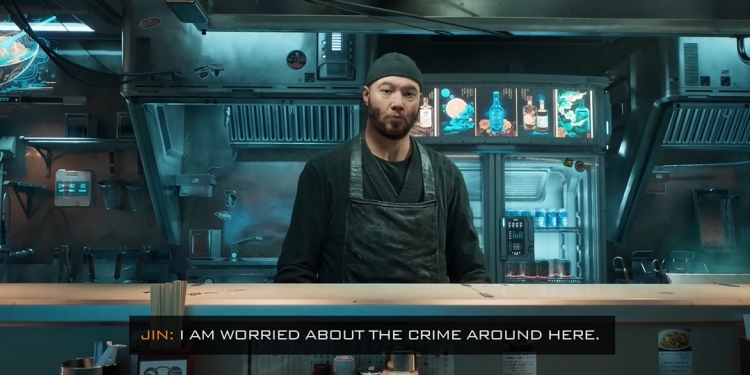

NVIDIA showcased those technologies in a demo it calls Kairos, in which a player talks to Jin, the proprietor of a ramen shop set in a Cyberpunk 2077-esque universe. By pressing and holding a key, the player can speak to Jin, who then describes his plight and, when asked if he needs help, names the person the player is supposed to look for and where to find him.

During his keynote, NVIDIA CEO Jensen Huang said the creators of the demo simply needed to feed Jin’s generative AI with his backstory and the game’s lore. The company’s GeForce Platform vice president Jason Paul also told The Verge that ACE for Games can scale to more than one character at a time and can theoretically even let characters talk to each other, though he hasn’t actually seen that tested.

It’s clear the technology isn’t quite finished: Jin sounds stilted, especially compared to what ChatGPT is capable of these days, and his facial animations still look a little stiff and robotic. But it is a fascinating glimpse into the future of video games, one where players can freely talk to characters, opening up far more open-ended storytelling possibilities.