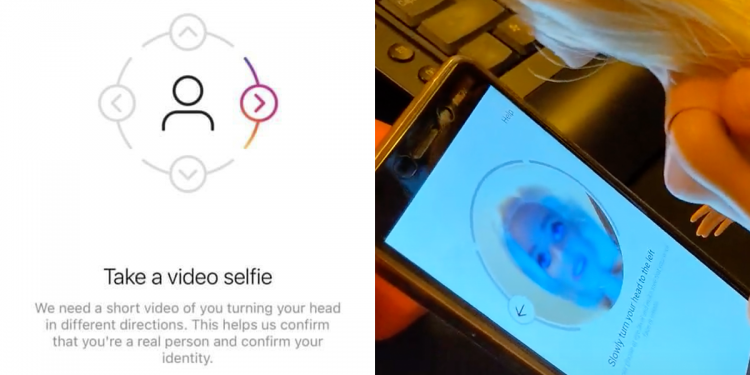

Instagram has been asking some users to submit video selfies to verify that they are real humans. However, the check turned out to be easily fooled with a Barbie doll.

The video selfie verification system was recently spotted by social media consultant Matt Navarra, but it actually showed up for some users last year.

Instagram is now using video selfies to confirm users identity

Meta promises not to collect biometric data. pic.twitter.com/FNT2AdW8H2

Matt Navarra (@MattNavarra), November 15, 2021

A post on the Instagram subreddit described one user's trouble with the video selfie feature, and many commenters reported the same problem. It seems that Instagram took the feature down because of technical difficulties and brought back an improved version a year later. In that post, users saw the verification page after their accounts had been disabled, but the new version reportedly appears only when you create a new account.

I tried making a new Instagram account with suspicious details, but Instagram did not ask me for a video selfie. This might simply be because the feature has not been rolled out to everyone yet.

How does Instagram video selfie verification work?

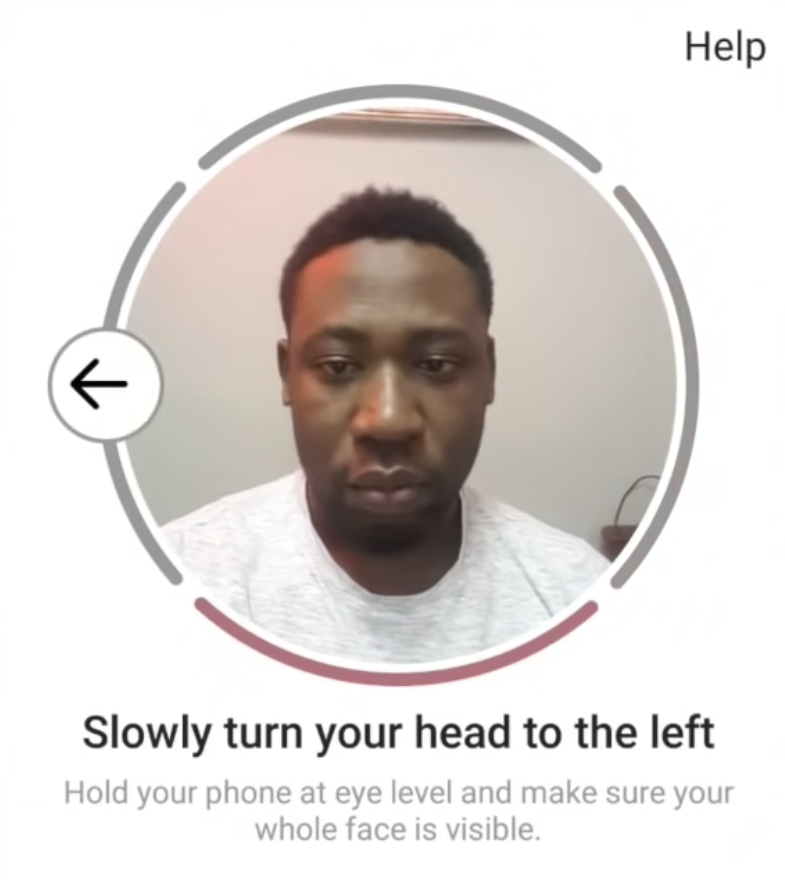

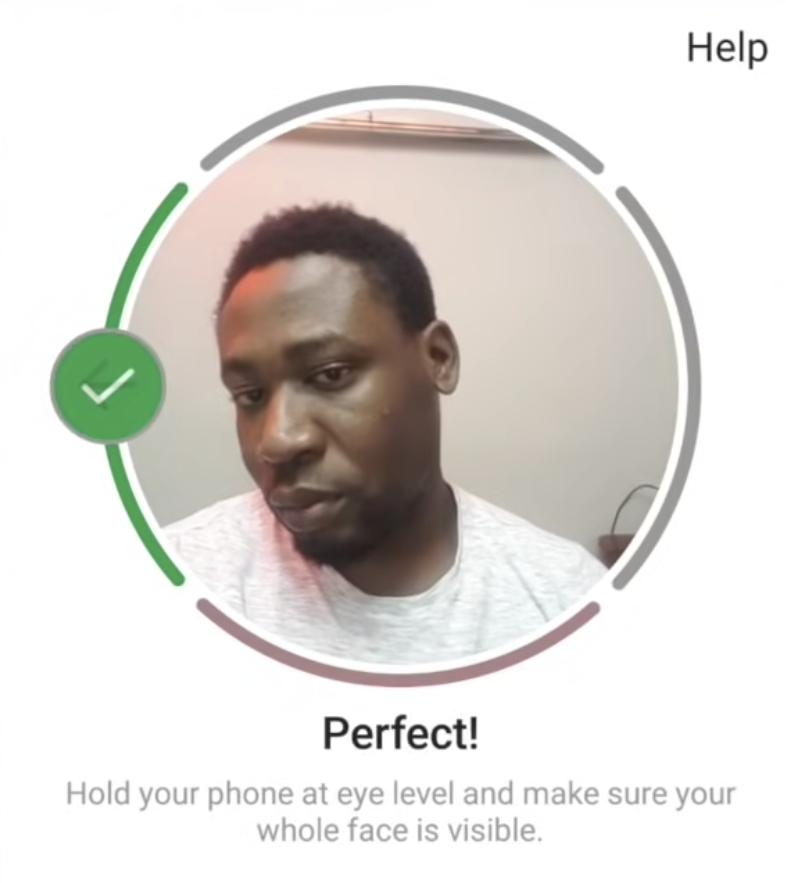

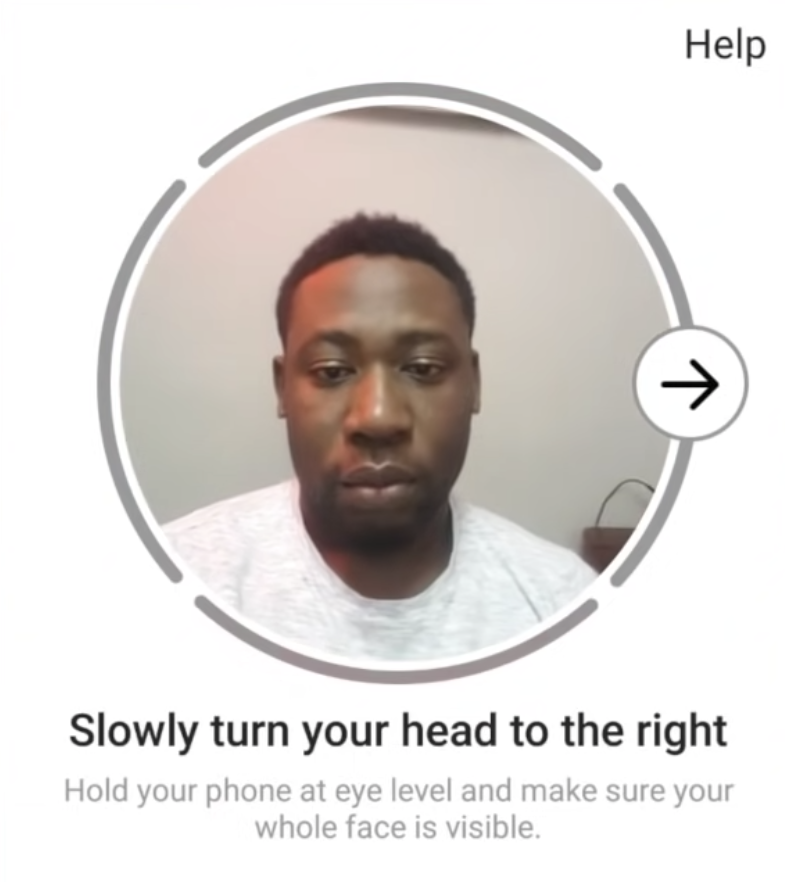

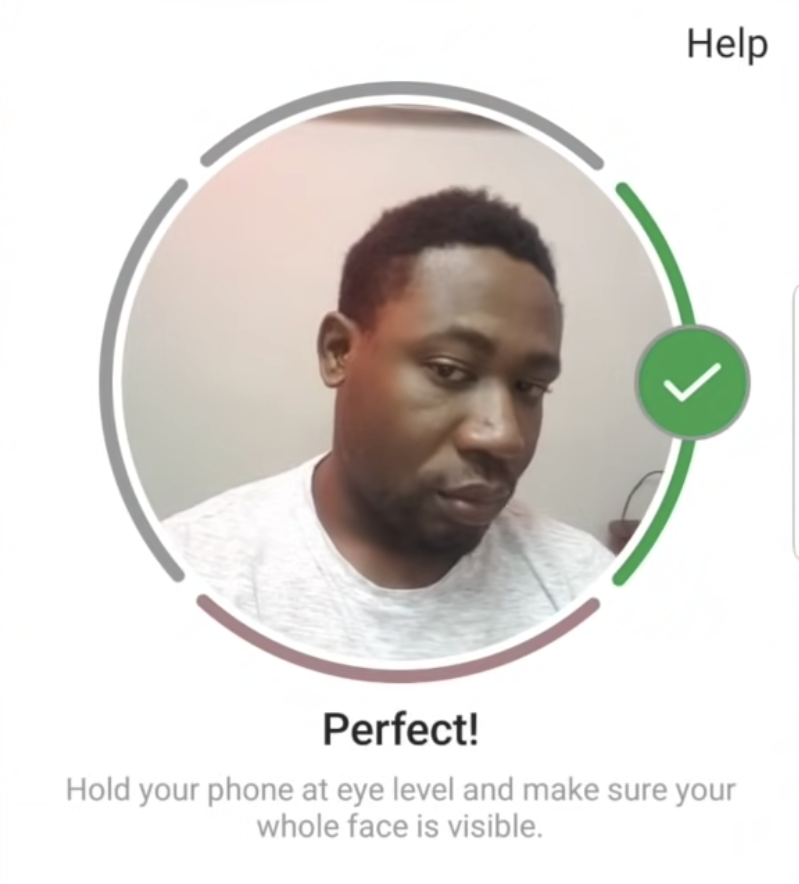

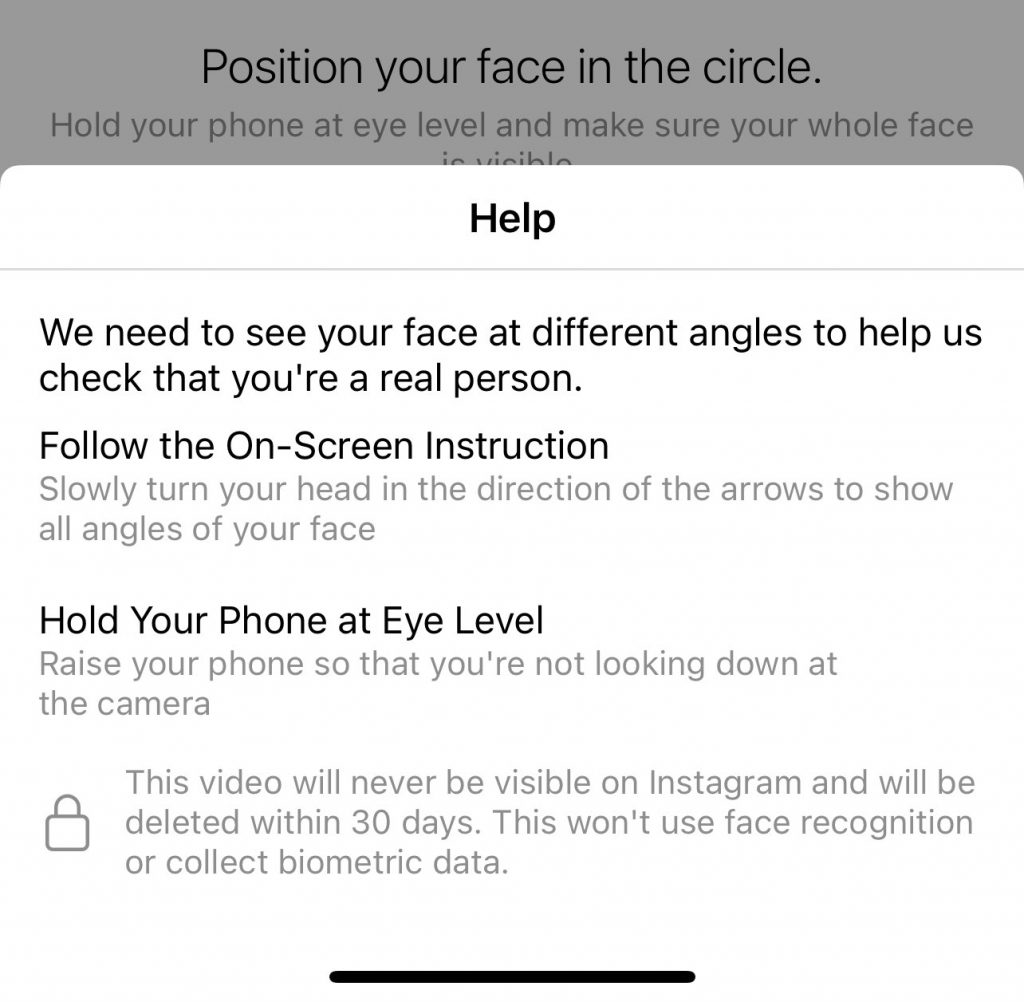

If you make a new Instagram account and Instagram wants to verify that you’re a real person, you will get a pop-up asking you to take a video selfie. Position your face in the circle and hold your phone at eye level. After that, follow the on-screen instructions and turn your head in the directions shown.

Once you’re done, you can submit the video selfie and Instagram will take a few seconds to automatically verify it.

Instagram fooled by a Barbie doll

User Alexander Chalkidis found that the human verification could be completed without a human at all. He fooled the Instagram feature with both a Ken doll and a Barbie doll. This probably means that Instagram does not actually use biometrics to analyse your face, and that the check instead works more like a harder CAPTCHA.

Given this flaw, it is theoretically possible to automate making Instagram accounts without the presence of a human, although Instagram might improve the human detection in a future update.

Can we trust Instagram and Meta?

Instagram insists that your privacy is being protected, saying: “This video will never be visible on Instagram and will be deleted within 30 days. This won’t use face recognition or collect biometric data.” However, its parent company Meta (previously Facebook) has a terrible reputation when it comes to privacy. The statement that the video “will be deleted within 30 days” also implies that Instagram keeps your verification video for up to 30 days, for reasons it does not explain.

Meta recently ended Facebook’s facial recognition feature, but it did not mention anything about Instagram. It is unclear whether Instagram is using your face to train its models.

The selfie verification system is not a new idea. Dating apps like Bumble and Tinder have been using similar methods for a long time. When it comes to facial biometrics, AirAsia launched the FACES feature, which turns your face into a boarding pass.
