In a new blog post, YouTube outlined its approach to protecting kids and families on its platform. The approach is built on a framework of four “Rs”: remove harmful content, raise authoritative voices, reduce borderline content, and reward trusted creators.

“As kids and teens spend more time online, it’s important to protect their experience while encouraging exploration. Responsibility is our number one priority at YouTube, and nothing is more important than protecting kids,” wrote James Beser for YouTube.

The platform launched YouTube Kids in 2015, offering a version of YouTube made for children. The service includes curated selections of content, parental control features, and filtering of videos deemed inappropriate for children, depending on their age.

YouTube also gives parents the option to create a supervised account for their tween or watch together with their kids. But what else is YouTube doing to solidify its safety practices for kids and families?

Remove

YouTube says that it regularly reviews its policies against content that may “exploit or endanger minors” and that it has “committed significant time and resources toward removing violative content as quickly as possible”. In Q2 of 2021 alone, it removed over 1.8 million videos for violations of its child safety policies, including content that targets young minors and families but contains sexual themes, violence, obscenity, or other mature themes.

It has also improved the YouTube Kids app, having “raised the bar for which channels and videos” are allowed on the curated platform. For example, it has removed content that “only focus on product packaging or directly encourage children to spend money”.

Raise

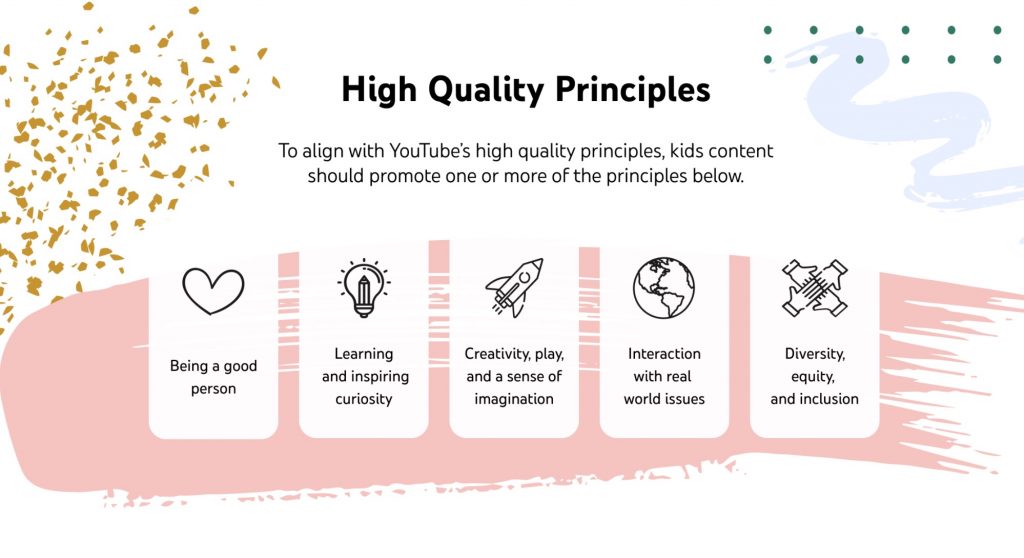

In trying to “raise authoritative voices”, YouTube decided on some principles that would help determine if a video should be promoted to kids. They include “being a good person”, “learning and inspiring curiosity”, “creativity, play, and a sense of imagination”, “interaction with real world issues”, and “diversity, equity, and inclusion”.

These principles are used to determine which content YouTube “raises up” in its recommendations. This means that when you’re watching “made for kids” content on YouTube, the videos should be “age-appropriate, educational, and inspire creativity and imagination”.

Reduce

Using the same principles, YouTube “reduces” the reach of content that doesn’t meet them. Kids content that is “low-quality” but doesn’t violate its Community Guidelines is less likely to show up in recommendations. This includes videos that are heavily commercial or promotional, or that encourage negative behaviors or attitudes, among others.

Reward

According to YouTube, it rewards creators of kids and family content and helps them grow and succeed on the platform. However, these channels are held to a “higher bar”, as they need to deliver high-quality content and comply with kids-specific monetisation policies.

“Every channel applying to the YouTube Partner Program undergoes review by a trained rater to make sure it meets our policies and we continually keep these guidelines current. We also regularly review and remove channels that don’t comply with our policies,” explained YouTube.

YouTube also said that it has reached out to “potentially impacted creators” whose channels consist predominantly of low-quality kids content. The changes are set to take effect starting in November.

While these practices are mostly meant to protect kids and families on YouTube Kids, YouTube has also had trouble with content on its regular platform, particularly anti-vaccine content. In late September, YouTube announced that it had banned false content regarding vaccines, but some have regarded the action as “a little too late”.
