What would we do without Google? Its search engine has helped us find answers to almost any question we have, and it keeps getting smarter and more refined as new features are added. At its Search On event, Google announced several more features that could help it “read your mind”.

One feature is a Google Lens tool that lets you search with both words and photos. The other headline feature is a new “Things To Know” box that serves up answers to questions commonly related to your search. Both features are powered by a machine learning model called MUM, or Multitask Unified Model.

According to Google, MUM not only understands language but also generates it, and it can pick up information from formats beyond text, such as pictures, audio, and video. It’s also trained across 75 different languages and many different tasks at once, which lets it develop a more comprehensive understanding of information and world knowledge than previous models.

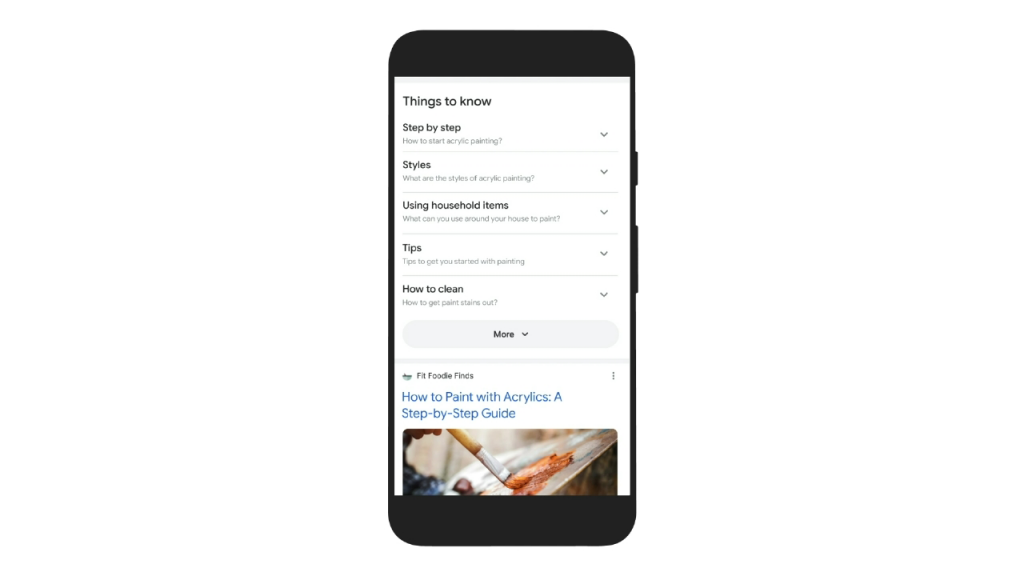

“Things To Know”

With its “Things To Know” box, Google will be able to include context beyond standard text-based search terms. This can help when you’re stuck on what to search for or which words or phrases to use.

When you search for a term on Google, you’ll be able to scroll down to the “Things To Know” section, where its AI expands your search and leads you down a “rabbit hole”. For example, if you search for “acrylic paint”, the “Things To Know” section will surface a description of acrylic paint and where you can buy it, along with links on how to use acrylic paints, the styles associated with this type of painting, tips on technique, and how to get started with things you have around the house.

In short, it surfaces what Google thinks you’ll search for next. The feature will be available “in the coming months”.

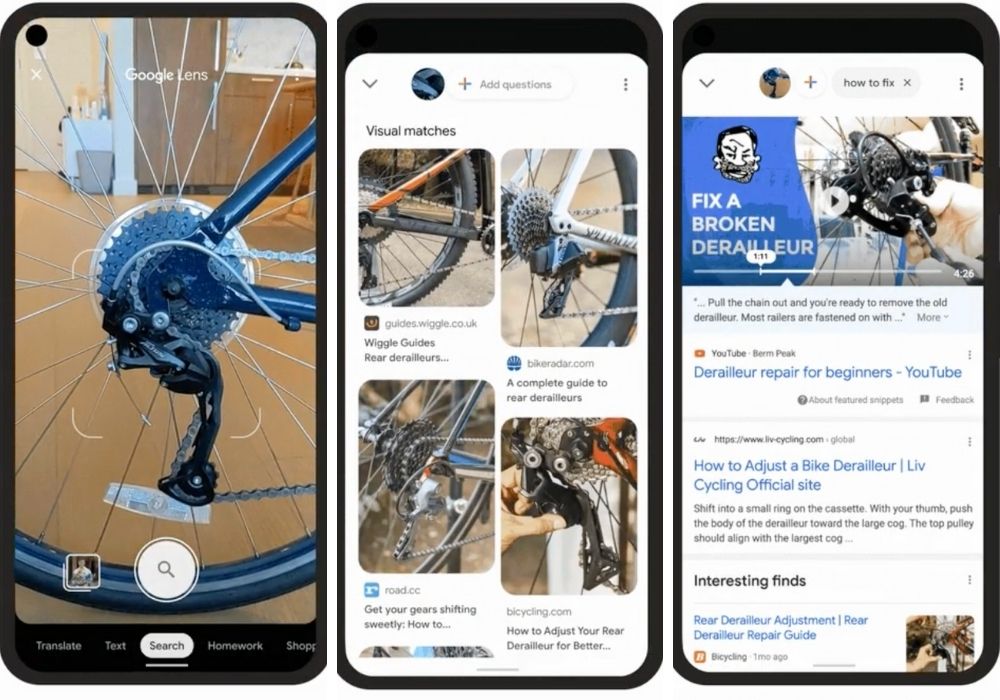

Google Lens search

Google Lens will be able to guess what you want to search for based on the picture you take with it. For example, you can use Google Lens to take a picture of a bike with a broken derailleur (not everyone knows what a derailleur is) and then type “how to fix”, and Google will work out what you mean and find relevant results for you.

These Google Lens features won’t be available until early next year, and it’s unclear whether they will appear in the standalone Google Lens app or inside an Android phone’s default Camera app.

Google will also use machine learning to recognise moments in a video and identify topics related to the scene, such as a specific animal that isn’t mentioned in the video’s title or description. The feature will roll out “in the coming weeks”, with more “visual enhancements” in the coming months.

Other new search features include “Refine this search” and “Broaden this search” options, which will help you narrow down your original question or go beyond it. These features will also roll out in the coming months.

[ SOURCE, IMAGE SEARCH ]