The SAND Lab (Security, Algorithms, Networking and Data) at the University of Chicago developed Fawkes. Named after the Guy Fawkes mask of ‘V for Vendetta’, Fawkes is an algorithm and software tool that lets users alter their own images just enough to stump facial recognition systems.

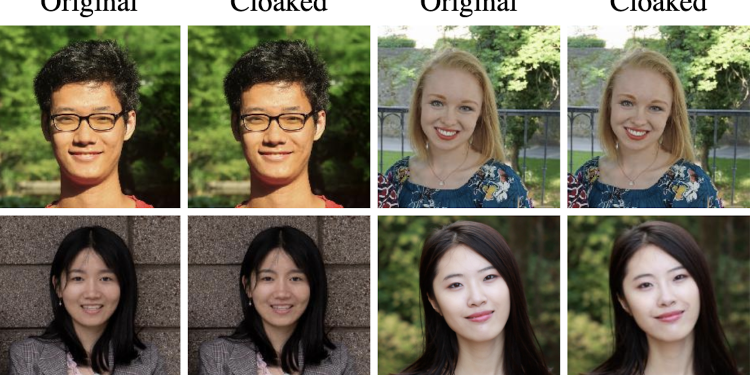

The changes that Fawkes makes are so small that altered photos look almost the same to human eyes, yet they are significant enough to prevent third-party facial recognition systems from identifying the people in them.

Fawkes uses a process called ‘image cloaking’, which makes tiny, pixel-level changes to an image that are invisible to the human eye. When someone uses ‘cloaked’ photos to build a facial recognition model, the images teach the system a highly distorted version of what makes you look like you.
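To make the idea of a bounded, pixel-level change concrete, here is a minimal Python sketch. It is not the Fawkes implementation: the random linear `extractor` is a hypothetical stand-in for a real face-recognition feature network, the per-pixel limit `eps` is an assumed stand-in for whatever visibility budget the real tool uses, and the function name `cloak` is made up for illustration. The sketch only shows the general pattern of nudging pixels so that a model "sees" features closer to someone else's, while no pixel moves by more than a small amount.

```python
import numpy as np

def cloak(image, target_features, extractor, eps=0.03, steps=50, lr=0.01):
    """Toy cloaking: shift extractor @ image toward target_features
    while changing no pixel by more than eps (assumed budget)."""
    cloaked = image.copy()
    for _ in range(steps):
        # Gradient of ||extractor @ cloaked - target_features||^2
        residual = extractor @ cloaked - target_features
        grad = 2.0 * extractor.T @ residual
        # Small signed step, then project back into the allowed range
        cloaked = cloaked - lr * np.sign(grad)
        cloaked = np.clip(cloaked, image - eps, image + eps)
        cloaked = np.clip(cloaked, 0.0, 1.0)  # keep valid pixel values
    return cloaked

rng = np.random.default_rng(0)
n_pixels, n_features = 64, 8

# Hypothetical linear "feature extractor" (a real one is a deep network)
extractor = rng.normal(size=(n_features, n_pixels))

image = rng.uniform(0.2, 0.8, size=n_pixels)   # your photo, flattened
other = rng.uniform(0.2, 0.8, size=n_pixels)   # a different person's photo
target_features = extractor @ other

cloaked = cloak(image, target_features, extractor)

before = np.linalg.norm(extractor @ image - target_features)
after = np.linalg.norm(extractor @ cloaked - target_features)
max_change = np.abs(cloaked - image).max()

print(f"feature distance to target: {before:.2f} -> {after:.2f}")
print(f"largest single-pixel change: {max_change:.3f}")
```

In this toy setup the cloaked image stays within `eps` of the original at every pixel, yet its features sit measurably closer to the other person's. A model trained on such images would learn a skewed notion of what the original face looks like.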

“In today’s world where big tech is becoming ubiquitous in our lives, it is clear that we cannot trust large companies to protect our privacy interests,” said Ben Zhao, professor at the University of Chicago and one of the researchers who developed Fawkes.

The researchers say that Fawkes “provides 95+% protection against user recognition”. They also report that the tool tested 100% effective against advanced facial recognition systems such as Microsoft Azure Face, Amazon Rekognition and Face++.

Besides Fawkes, researchers from Facebook and Huawei are trying to alter key facial features in videos, even during live streams, in order to “decorrelate the identity.” Another trick that has been found to fool algorithms is to wear specific accessories like hats and glasses, or clothing with specific patterns.

You can try downloading Fawkes here to test it out. When I tried, however, the software refused to load. The SAND Lab says you should check back often for new releases, and you can contact the researchers by email (you can find their contacts at the top of this page by clicking on their names).
