Most smartphones these days ship with cameras of at least 12MP, and on newer flagships it is not uncommon to see 64MP and 108MP cameras. The folks at Samsung are looking at opening up new possibilities with image sensors that can rival the human eye.

According to Yongin Park, EVP and Head of the Sensor Business Team in Samsung's System LSI Business, taking pictures and videos throughout the day has become part of everyday life. That seamless experience is possible thanks to advances in mobile photography, which are enabled by image sensors.

He explained that the human eye is said to match a resolution of around 500MP. By comparison, most DSLR cameras today come with 40MP sensors, and he added that the industry still has a long way to go to match human perception.

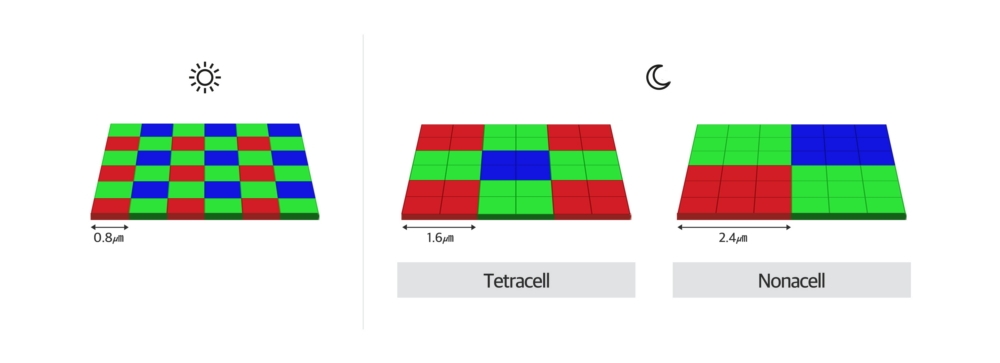

Although simply adding more pixels to a sensor might seem like the easy fix, the resulting sensor would be too large to fit in a mobile device. The more sensible approach is to shrink the individual pixels so that the overall sensor stays as compact as possible. However, smaller pixels each receive less light, which can result in fuzzy and dull pictures.

Using Pixel Technologies to balance sensor size and light absorption

To enable high-resolution imaging on a compact sensor, Samsung has adopted several technologies that can be found on its 64MP and 108MP image sensors. Its latest 108MP ISOCELL Bright HM1 sensor, found on the Galaxy S20 Ultra, packs 108 million 0.8-micron pixels onto a 1/1.33″ sensor.
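As a rough sanity check on those figures, the sketch below estimates the sensor's pixel grid and physical size from the pixel count and pixel pitch. The 4:3 aspect ratio and the helper name `sensor_geometry` are assumptions made for illustration; neither comes from Samsung.

```python
import math

def sensor_geometry(total_pixels, pitch_um, aspect=(4, 3)):
    """Estimate the pixel grid and physical size of a sensor.

    `aspect` is an ASSUMED width:height ratio (4:3 by default);
    it is not stated in the article.
    """
    aw, ah = aspect
    # Solve width * height = total_pixels with width / height = aw / ah.
    height_px = math.sqrt(total_pixels * ah / aw)
    width_px = height_px * aw / ah
    # Convert pixel counts to millimetres (1 mm = 1000 microns).
    width_mm = width_px * pitch_um / 1000
    height_mm = height_px * pitch_um / 1000
    diagonal_mm = math.hypot(width_mm, height_mm)
    return round(width_px), round(height_px), width_mm, height_mm, diagonal_mm

# Figures from the article: 108 million pixels at 0.8 micron.
w_px, h_px, w_mm, h_mm, d_mm = sensor_geometry(108_000_000, 0.8)
print(f"{w_px} x {h_px} pixels, {w_mm:.1f} x {h_mm:.1f} mm, diagonal {d_mm:.1f} mm")
```

Under these assumptions the diagonal works out to about 12 mm. That is broadly consistent with the 1/1.33″ format if one applies the common rule of thumb that an optical-format "inch" corresponds to roughly 16 mm of image diagonal.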

Previously, Samsung had adopted its Tetracell technology, which combines 4 nearby pixels in a 2×2 array to create a larger 1.6-micron superpixel. On the Bright HM1 sensor, the Nonacell technology combines 9 pixels in a 3×3 array to form an even larger 2.4-micron pixel. This dramatically improves performance in low-light situations. Samsung also claims to be the first to produce image sensors based on 0.7-micron pixels, with its 43.7MP ISOCELL Slim GH1 sensor.
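The arithmetic behind these superpixel sizes can be sketched as follows. The function `bin_pixels` is a hypothetical helper, and the binned resolutions it prints are derived figures rather than quoted specifications. Applying 2×2 binning to the 108MP figure is purely illustrative, since the article only associates Nonacell with that sensor.

```python
def bin_pixels(total_mp, pitch_um, n):
    """Model combining an n x n block of pixels into one superpixel.

    Returns (superpixel pitch in microns, binned resolution in MP,
    gain in light-collecting area per output pixel).
    """
    binned_pitch_um = pitch_um * n   # side length grows by a factor of n
    binned_mp = total_mp / (n * n)   # output pixel count shrinks by n^2
    area_gain = n * n                # collecting area per pixel grows by n^2
    return binned_pitch_um, binned_mp, area_gain

# 0.8-micron pixels as described in the article; 108MP used for both rows
# for illustration only.
for name, n in [("Tetracell (2x2)", 2), ("Nonacell (3x3)", 3)]:
    pitch, mp, gain = bin_pixels(108, 0.8, n)
    print(f"{name}: {pitch:.1f}-micron superpixel, {mp:.0f}MP output, {gain}x area")
```

In this simple model, the larger pixel comes at the cost of output resolution: a 3×3 scheme trades a 108MP readout for a 12MP image whose pixels each collect light over nine times the area.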

Yongin Park also shared that most cameras can only capture what is visible to the human eye, at wavelengths between 450 and 750 nanometers (nm). However, image sensors that can detect light beyond that range can be used for a variety of applications. These include ultraviolet sensing for diagnosing skin cancer and infrared imaging for more efficient quality control in agriculture and other industries.

Sensors that make the invisible visible

Apart from image sensors, Samsung is also looking at developing other sensors that can register smells or tastes. Yongin Park says sensors that go beyond human senses will soon become an integral part of our daily lives, helping to make the invisible visible and allowing people to go beyond what their own senses are capable of.

The Korean company also aims to produce 600MP image sensors in the future, which could capture more detail than the human eye. At the moment, image sensors are primarily used in smartphones, but their use is expected to expand soon into other fields such as autonomous vehicles, IoT and drones.
