Instagram is rolling out a feature that notifies users when their captions on photos and videos are considered offensive, giving them a chance to “pause and reconsider their words”, according to the company.

This is the latest move by the Facebook-owned company against “online bullying”, and is similar to a feature rolled out earlier this year that uses AI to warn users about potentially offensive comments before they’re posted.

The company is extending the approach to captions because the results from the comment warnings have been “promising”, and these warnings supposedly “encourage people to reconsider their words when given a chance”.

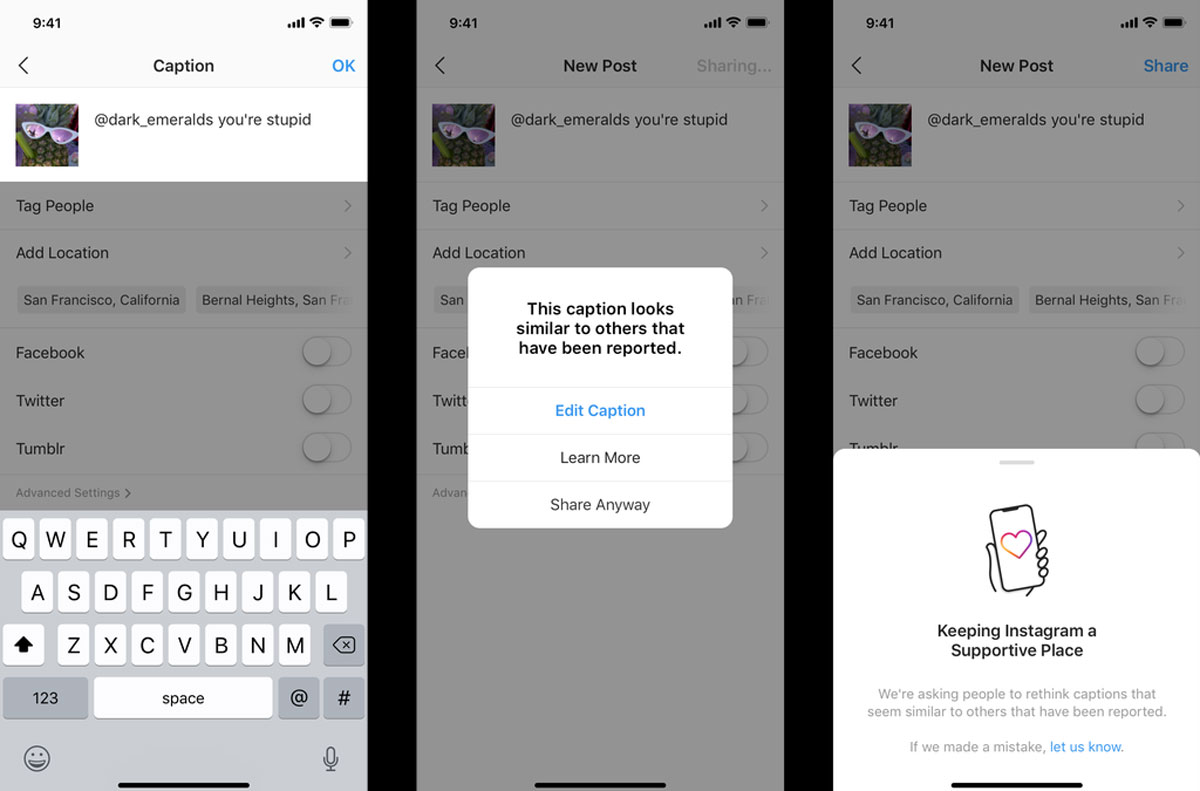

Here’s how it will work: while a caption is being written, machine learning technology will prompt the user if the caption is detected as “potentially offensive”.

While the feature doesn’t actually stop a user from posting a caption, even if it’s flagged as offensive, it’s an interesting addition that could help to cultivate a healthier culture with regard to social media comments and online harassment.

Instagram also rolled out a feature earlier this year that lets users “shadow ban” certain accounts, which basically blocks those accounts without their owners finding out.

While many cynics out there may find these features somewhat redundant, I’d argue that they’re still a move in the right direction. With social media platforms increasingly utilised by children, perhaps the new AI-powered features offer educational value as well.

According to the company: “As part of our long-term commitment to lead the fight against online bullying, we’ve developed and tested AI that can recognize different forms of bullying on Instagram.”

The new feature is rolling out today in select countries only, although a global release is expected in the coming months.
