YouTube is powerful. People sink hours and hours of their lives into this platform daily for entertainment, information and distraction. It’s also an easy way for parents to keep their children occupied when they’re busy with work.

But how sure are you that the videos your child might find on YouTube are safe for their consumption? Well, I did some digging and it turns out that even the most innocent of searches can lead you to some truly disturbing content.

You may have heard of #ElsaGate, the allegation that pedophiles are using YouTube to “groom” children with misleading videos (which get millions of views) and that YouTube is turning a blind eye to the matter. While I haven’t found conclusive evidence to prove that it’s entirely true, my research did produce some alarming results.

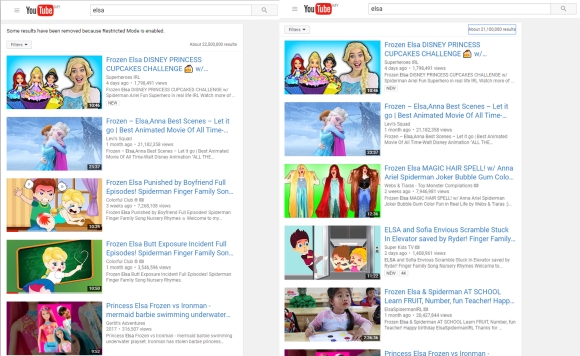

By simply searching YouTube with the keyword “Frozen” or “Elsa”, I found that the results were dominated by poorly animated videos with downright disturbing thumbnails and titles. These videos disguised themselves as “kid-friendly” but had animated elements of violence, pregnancy and sex in them.

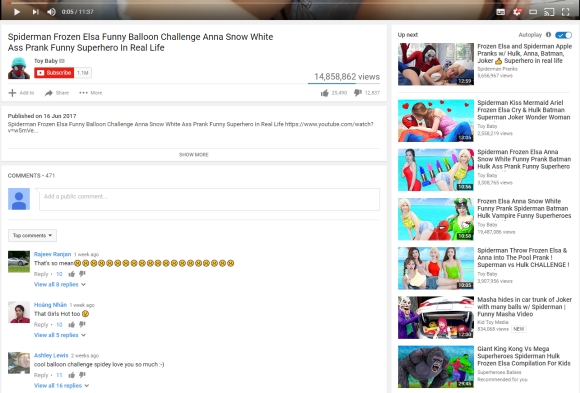

Things got even worse when I clicked into one of the videos and saw that the recommended videos section had thumbnails containing scantily clad women in sexually suggestive poses, violence involving popular cartoon characters and a bunch more weird stuff. Scrolling through the comments, you will also notice that these videos are filled with what seems a lot like “bots” designed to automatically leave nice comments.

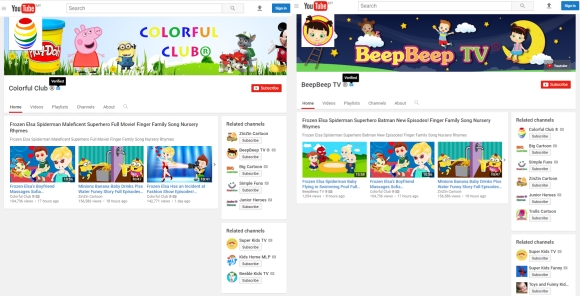

The worst part is that many of these videos were uploaded by verified YouTube channels with seemingly innocent names like Colourful Club and BeepBeep TV. Being verified on YouTube means that the channel belongs to an “established creator or is the official channel of a brand, business, or organisation”, which lends an appearance of credibility to the channel. These videos are also easy to find because their titles have been optimised to hit as many keywords as possible (including Elsa, Spiderman and Nursery Rhymes, etc.).

What’s more, I also found this video, which draws a connection between multiple YouTube channels whose videos share an identical scene, albeit with different animations:

However, as I dug further into this whole #ElsaGate situation, I found that it wasn’t just limited to videos about Elsa and Spiderman. There are also videos of children acting out scenes where they eat excrement-like substances out of miniature toilet bowls.

Sure, adults would know that they aren’t actually eating excrement, but do you think a toddler or pre-schooler will be able to tell the difference? Besides, just because we know it isn’t real poop, does it make the video any better? I mean, we know that the blood and violence in movies are fake, so why do violent movies still have age restrictions?

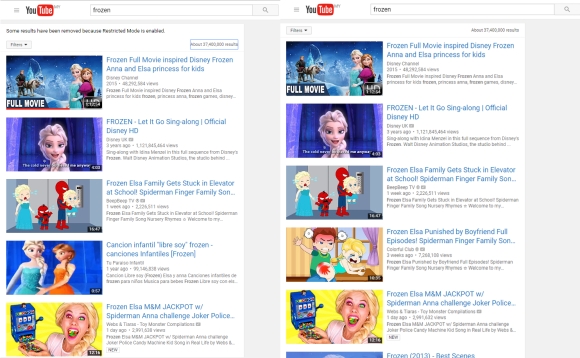

At this point, you must be thinking about the fact that YouTube has a “Restricted Mode” right? A restricted mode to filter out all the potentially harmful content that I highlighted above. Well, take a look at a comparison between a search with Restricted Mode switched on (via my account) and one with it left off (without an account logged in):

Yep, some stuff may be filtered out, but a large majority of it is still being displayed. Remember that poop-eating video I highlighted earlier? It still shows up even if Restricted Mode is on.

We reached out to Google Malaysia to get their comments on this matter and this was their response:

That’s the main reason we launched YouTube Kids (available in Malaysia since October 2016). We feel that YouTube Kids is the perfect platform for kids and minors to safely explore online content. Our goal at YouTube is to open up a world of knowledge and information in a safer and easier way for today’s kids and parents/guardians. Homegrown Malaysian creators are very much present on YouTube Kids including: Les’ Copaque Productions, Digital Durian, Animonsta, LTKO, etc.

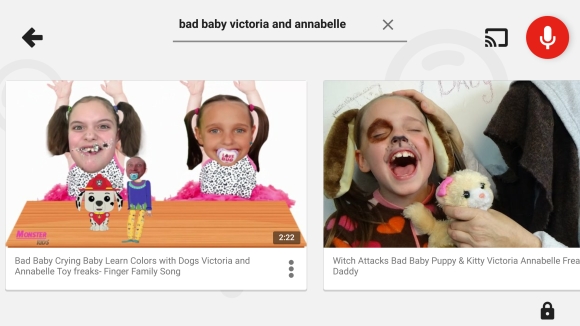

Yes, Google introduced YouTube Kids last year to help parents filter the garbage from the good, and for the most part I think it works. It’s certainly stricter with content than YouTube is (even with Restricted Mode on), but that does not mean the app is fully free from disturbing content. Just a few minutes with the app and I stumbled upon this video:

The video is not technically unsafe for kids because it doesn’t have the sexual, vulgar or gory themes you’d usually associate with non-kid-friendly videos, but there’s no denying that it’s disturbing. And that’s not all. Remember the poop-eating video I shared earlier? Well, the good news is that the YouTube Kids app did filter that particular video out, but other videos from that channel still surface when you search for them.

How do you keep your kids safe?

As a busy parent, I get the appeal of simply launching the YouTube or YouTube Kids app and then handing your mobile device over to your child. It can keep them occupied for hours on end, and for the most part YouTube does a good job of filtering out sexual, gory or vulgar content. It’s easy to assume that as long as a video doesn’t contain those themes, it’s automatically kid-friendly.

But as we’ve discovered, that’s simply not the case. There are far more subtle ways to poison your child’s malleable mind, ways that would be invisible to a pre-programmed filtering system. These channels have found a way to abuse a loophole in YouTube’s filtering system (as well as its SEO) and are uploading some truly shocking content under the guise of being kid-friendly. At a glance, these videos seem innocent, but when you actually sit down and watch them, you’d be appalled by how disturbing some of them are.

What I find peculiar is that YouTube, being part of the all-knowing search engine giant that Google is, with all of its resources, information and knowledge, hasn’t shut this down yet. Why not?

That said, there are measures that you as a parent can take to make sure your child doesn’t fall victim to these toxic videos. Although imperfect, the YouTube Kids app is still the most solid option a parent can use to filter out the worst kinds of content. It blocks the major stuff and sometimes that’s good enough.

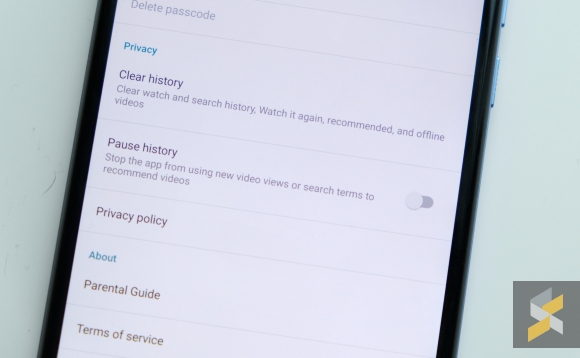

However, if you really want to make sure your kids are watching what you feel is right for them, you will need to spend some time scanning through the videos. You can also switch off the search function within the YouTube Kids app so your kids won’t accidentally find themselves watching unsavoury content. There’s also an option to stop the app from using video views and search terms to recommend new videos, which helps keep the circle tight.

You should also navigate to credible kid-friendly channels, like Didi and Friends, so you can be sure that your kid is getting wholesome content. And if you ever stumble upon unsafe videos in the app, don’t forget to flag and block the video so YouTube knows you don’t want to see any of that. In fact, Google Malaysia even encourages you to do so:

“We’re always looking to improve the YouTube experience for all our users and that includes ensuring that our platform remains an open place for self expression and communication. As a platform we strive to serve these varying interests by asking our community to flag any video that violates our strict community guidelines.”

It does look like Google doesn’t want to be so hands-on with content policing and prefers to leave it to the viewers instead. That’s a pretty big difference in policy compared to what a site like Facebook does.

Although it does seem like Google’s approach will keep content democratised, it’s worth noting that YouTube as a platform is a lot more silent about how they deliver content to their users compared to Facebook. Sure, Facebook is notorious for changing the way our Timelines work, but at least they’re open about it and they are open to community feedback.

YouTube, on the other hand, has always been very silent on their changes to the content recommendation algorithm, to the point where even creators are frustrated with YouTube.

(Interestingly, these videos are all unavailable if Restricted Mode is enabled)

I think, at this point, YouTube needs to start being more transparent with what they’re doing. They need to listen to the community more and tell us what they’re doing to their sorting algorithm. In the case of these disturbing videos, I don’t think it’s very hard to tell that this content isn’t the most appropriate thing for kids. Maybe YouTube can leave a pop-up at the start of the video warning parents that the content might be inappropriate and asking if they’re sure they want to continue.

They can also promote more quality content creators on the YouTube Kids app (like Digital Durian, Les’ Copaque, Animonsta, etc.) or give parents the ability to lock their app to a certain pool of content creators. YouTube clearly has the power to do so: just look at how efficient they are at taking down videos (and channels) for copyright infringement. If they can be so strict with copyright infringement, why are they so lenient with this?

For now though, you as a parent must put in the effort to go through the videos yourself. Otherwise, who knows what garbage video your child might end up watching.